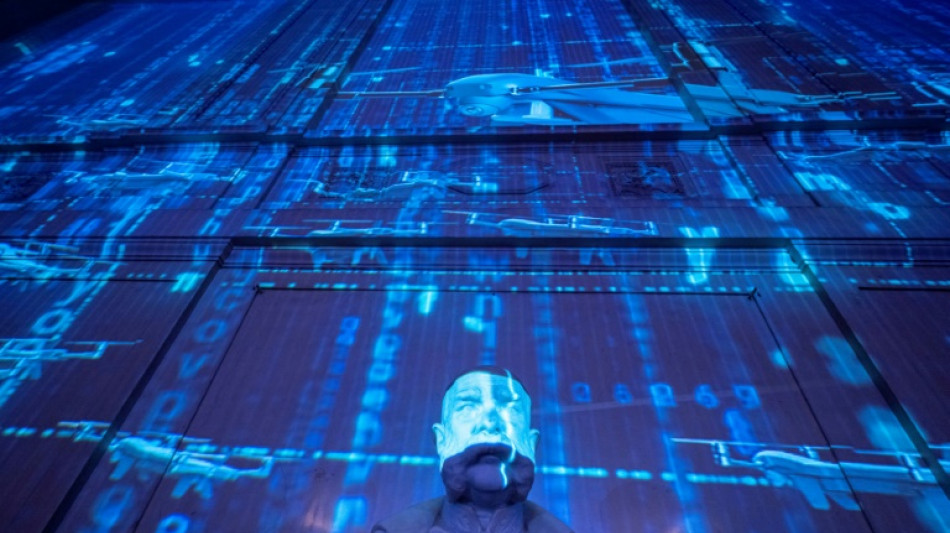

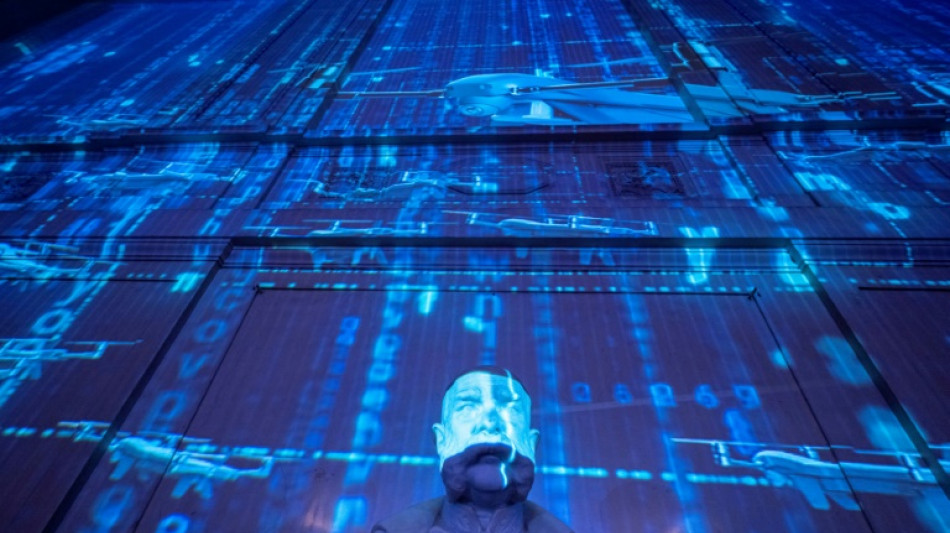

Hype is growing from leaders of major AI companies that "strong" computer intelligence will imminently outstrip humans, but many researchers in the field see the claims as marketing spin.

The belief that human-or-better intelligence -- often called "artificial general intelligence" (AGI) -- will emerge from current machine-learning techniques fuels hypotheses for the future ranging from machine-delivered hyperabundance to human extinction.

"Systems that start to point to AGI are coming into view," OpenAI chief Sam Altman wrote in a blog post last month. Anthropic's Dario Amodei has said the milestone "could come as early as 2026".

Such predictions help justify the hundreds of billions of dollars being poured into computing hardware and the energy supplies to run it.

Others, though, are more sceptical.

Meta's chief AI scientist Yann LeCun told AFP last month that "we are not going to get to human-level AI by just scaling up LLMs" -- the large language models behind current systems like ChatGPT or Claude.

LeCun's view appears to be backed by a majority of academics in the field.

Over three-quarters of respondents to a recent survey by the US-based Association for the Advancement of Artificial Intelligence (AAAI) agreed that "scaling up current approaches" was unlikely to produce AGI.

- 'Genie out of the bottle' -

Some academics believe that many of the companies' claims, which bosses have at times flanked with warnings about AGI's dangers for mankind, are a strategy to capture attention.

Businesses have "made these big investments, and they have to pay off," said Kristian Kersting, a leading researcher at the Technical University of Darmstadt in Germany and AAAI member.

"They just say, 'this is so dangerous that only I can operate it, in fact I myself am afraid but we've already let the genie out of the bottle, so I'm going to sacrifice myself on your behalf -- but then you're dependent on me'."

Scepticism among academic researchers is not total, with prominent figures like Nobel physics laureate Geoffrey Hinton and 2018 Turing Award winner Yoshua Bengio warning about dangers from powerful AI.

"It's a bit like Goethe's 'The Sorcerer's Apprentice', you have something you suddenly can't control any more," Kersting said -- referring to a poem in which a would-be sorcerer loses control of a broom he has enchanted to do his chores.

A similar, more recent thought experiment is the "paperclip maximiser".

This imagined AI would pursue its goal of making paperclips so single-mindedly that it would turn Earth and ultimately all matter in the universe into paperclips or paperclip-making machines -- having first got rid of human beings that it judged might hinder its progress by switching it off.

While not "evil" as such, the maximiser would fall fatally short on what thinkers in the field call "alignment" of AI with human objectives and values.

Kersting said he "can understand" such fears -- while suggesting that "human intelligence, its diversity and quality is so outstanding that it will take a long time, if ever" for computers to match it.

He is far more concerned with near-term harms from already-existing AI, such as discrimination in cases where it interacts with humans.

- 'Biggest thing ever' -

The apparently stark gulf in outlook between academics and AI industry leaders may simply reflect people's attitudes as they pick a career path, suggested Sean O hEigeartaigh, director of the AI: Futures and Responsibility programme at Britain's Cambridge University.

"If you are very optimistic about how powerful the present techniques are, you're probably more likely to go and work at one of the companies that's putting a lot of resource into trying to make it happen," he said.

Even if Altman and Amodei are being "quite optimistic" about rapid timescales and AGI emerges much later, "we should be thinking about this and taking it seriously, because it would be the biggest thing that would ever happen," O hEigeartaigh added.

"If it were anything else... a chance that aliens would arrive by 2030 or that there'd be another giant pandemic or something, we'd put some time into planning for it".

The challenge can lie in communicating these ideas to politicians and the public.

Talk of super-AI "does instantly create this sort of immune reaction... it sounds like science fiction," O hEigeartaigh said.

Y.Havel--TPP